Archive

Apple should copy from Samsung

Apple’s IBM Moment

The Innovation from Samsung that needs to be copied

Apple fans are quite enthusiastic in their (sometimes blind) following of Apple. In this post I suggest that the mantle is passing and Apple has its work cut out for it.

A historical analogy may be IBM in the early days of computing, which provided integrated hardware and software with a robustness and quality that set the gold standard. You did not get fired for buying IBM. However, with time the quality gap narrowed, competitors innovated a lot more and faster, and the IBM premium faded. Microsoft was one such competitor, with a more open approach. Since many others were enhancing the hardware as well as the extensible operating system and software, it set a new standard. The Mac continued in the tradition of controlled systems. It took to USB late, and Apple has been quite circumspect in adopting new technologies like NFC, preferring to be a laggard.

This has had advantages, as too open a system leads to variations, some lack of robustness and a varying user experience (the infamous Windows blue screen of death). This has hurt Android. Google is now trying to reduce the variation (Android One) and Apple is opening up. For the first time, iPhone and iPad users can select third-party keyboards and customize aspects of the UI that were previously fixed.

Apple now carries the cachet IBM used to carry. Its adoption of oversized phones (phablets) has given legitimacy to that Samsung upstart, and NFC may also get a boost from Apple Pay. This is good for customers; competition is always useful. There are several Android features that Apple has borrowed (Active Notification, Cloud backup & Sync) and improved (Siri over OK Google). However, competition does not stand still. OK Google (Google Now) is vastly better than Siri for Asian users. The voice recognition is superb and the action very close to intent. Siri falls flat a lot. It has to discover the rest of the world, and Apple’s future growth depends on that.

I am an investor in Apple and love the fact that it commands huge margins and has such growth (Apple fans, please keep buying).

I wish to encourage Apple to shamelessly copy two features from the Samsung Note lineup. I use a Note 2 smartphone and a Note Tab 10.1 2014 edition, and they are way ahead of the iPad. It is the software and the productivity boost that make it so. Apple still provides a crisper and smoother experience, but these devices are close, very close. It is difficult to justify the Apple premium after you experience the productivity boost. I admit that my usage may not be typical. I am a post-PC user: I spend more time with these devices, and I want to do more than just consume. I believe this is a large enough segment to matter, and it will become larger. The phablet emerged to meet the needs of users who do much more than talk, listen to music and share photos, and more and more users will want to create. For example, I prefer to draw my mind maps on the tablet.

Mindjet on a touch tablet is far easier and more fun than on the desktop.

S Pen: a digitizer masquerading as a stylus

The S Pen is not a stylus in the way Steve Jobs thought about it. It is actually a digitizer, and a pretty good one. The digitizer is so good that Autodesk AutoCAD 360 shines, and photo artists can do very precise work. The keyboard supports handwriting input in any application: it takes your input and converts it to text. However, I am not a big fan of that yet, as it is still a bit slow, but it is improving and should feel natural within 2 years.

I go back and forth with web page designers. It is incredibly useful to take out the S Pen and annotate the web page with exactly the changes needed: very quick and precise. You can mark up anything, and this does not depend on support from the third-party application. See an annotation of an article from the FT app. (I trust their lawyers will not come after me for copyright…)

The native S Note application has slick features. You can sketch diagrams and write mathematical equations and have them converted and cleaned up. It does a very good job.

I have stopped using paper and take notes of meetings and of things I am studying. Papyrus with S Note is a full replacement for a paper notebook, plus you can embed graphics and screenshots as well. As a bonus, your notes and notebooks are backed up to the cloud.

Multi Window

Another feature is multi-window support. This needs a genuinely multitasking OS. iOS has a pseudo-multitasking feature, which is being reworked. On a desktop I can open a PowerPoint deck and review it slide by slide, adding comments in an email reply window on the side. This is just not possible on a tablet. However, the Samsung Note Tab 10.1 supports this, and it is very productive. A favorite activity of mine is collecting interesting uses of connected devices (IoT) and then updating the IoT forum that TiE runs.

I have dedicated modes where Evernote and the browser open side by side, so I can collect items as I browse and then curate and post them to the IoT community. Another nifty feature, Pen Window, lets you open an application like a calculator in a small window. You can use it to do quick checks.

Copying contact info is a common use for me.

As an aside, this is the major failure of Windows 8: trying to impose the modal windows of phones on a desktop.

Multi Window is coming to Android and should make it to iPhone.

A/B Testing at scale

I really appreciate that Samsung has stuck with the Note and iterated with different formats and different software features. The S Pen applications have benefited from large-scale A/B testing across multiple devices and feature sets. This again is different from the buttoned-down process previously used at Apple. There was little end-user input and more of Steve Jobs’s “My Way or the Highway”. That too is changing.

About time. The cycle of innovation and consumer good is well served.

Agility and Flexibility for Software Products

Agility and Flexibility

[An interesting discussion on ProductNation, “Customizable Product: An oxymoron”, triggered me to write this post.]

I find many people use these words interchangeably. However, the distinction is important and a source of value. Agility can be engineered with proven techniques and best practices. With advances in software engineering there is convergence on the tools and techniques to achieve agility, and it may no longer be a source of differentiation but a necessary feature. Flexibility, on the other hand, requires deep insight into diverse needs and types of usage, and done imaginatively it can provide great value to users and be a source of competitive advantage.

The online Merriam-Webster dictionary offers the following definitions:

Flexible: characterized by a ready capability to adapt to new, different, or changing requirements.

Agile: marked by ready ability to move with quick easy grace.

In a software product context it may be more appropriate to define agility as the ability to change things quickly, and flexibility as the ability to achieve a (higher) goal by different means. So the ability to value inventory by different methods like FIFO, LIFO or standard cost is flexibility. The ability to change a commission rate or sales tax rate quickly (generally by changing a parameter in a table or a file, avoiding the need to make code changes) is agility.
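
A minimal Python sketch of the distinction, under stated assumptions: the names `PARAMETERS`, `Lot`, `cost_of_goods_sold` and `sales_tax`, the 18% tax rate and the lot figures are all hypothetical, and the standard-cost branch is simplified (it ignores variances). Changing the tax rate is a one-value parameter edit (agility); costing the same sale by FIFO, LIFO or standard cost is the same goal reached by different means (flexibility).

```python
from dataclasses import dataclass

# Agility: a value the business changes often lives in a parameter table
# (hypothetical name and rate), so changing it needs no code change.
PARAMETERS = {"sales_tax_rate": 0.18}

@dataclass
class Lot:
    qty: int          # units received in this purchase
    unit_cost: float  # purchase price per unit

def cost_of_goods_sold(lots, qty_sold, method, standard_cost=None):
    """Flexibility: the same goal (cost the units sold) reached by different methods."""
    if method == "STANDARD":
        return qty_sold * standard_cost
    if method not in ("FIFO", "LIFO"):
        raise ValueError(f"unknown valuation method {method!r}")
    # FIFO consumes the oldest lots first; LIFO consumes the newest first
    ordered = lots if method == "FIFO" else lots[::-1]
    remaining, cost = qty_sold, 0.0
    for lot in ordered:
        take = min(remaining, lot.qty)
        cost += take * lot.unit_cost
        remaining -= take
        if remaining == 0:
            break
    if remaining:
        raise ValueError("not enough stock on hand")
    return cost

def sales_tax(amount):
    return amount * PARAMETERS["sales_tax_rate"]

lots = [Lot(10, 5.0), Lot(10, 6.0)]                              # oldest lot first
print(cost_of_goods_sold(lots, 15, "FIFO"))                      # 80.0
print(cost_of_goods_sold(lots, 15, "LIFO"))                      # 85.0
print(cost_of_goods_sold(lots, 15, "STANDARD", standard_cost=5.5))  # 82.5
```

Adding a fourth valuation method means adding a branch, which is a design decision, whereas changing the tax rate is only a data edit.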

There is a range or scale (say 1 to 10) of agility. Making changes in code, especially in third-generation languages like Java or C#, is the baseline. Using a scripting language like JavaScript or Python may be less demanding than a compiled language, but it is still not as agile as most users expect. Most users would rate updating a parameter in a file, or in a simple user interface like an Excel-type spreadsheet, as simpler and faster. Changing logic by using an If-Then type of rule base is in between: simpler than coding but more complex than table updates.
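
The middle of that scale, an If-Then rule base, can be sketched as rules held as data rather than code. This is an illustrative Python sketch only; the rule table `COMMISSION_RULES`, the function `commission_rate` and all rates are hypothetical. Changing a rate is a table update; adding a new condition means editing the rule list, which is more involved but still avoids a code change.

```python
# Hypothetical rule table: each row is an If-Then rule that a business user could
# maintain like a spreadsheet. Rows are checked top-down; the first match wins.
COMMISSION_RULES = [
    {"product": "insurance", "min_amount": 100_000, "rate": 0.05},
    {"product": "insurance", "min_amount": 0,       "rate": 0.04},
    {"product": "*",         "min_amount": 0,       "rate": 0.02},  # default rule
]

def commission_rate(product: str, amount: float, rules=COMMISSION_RULES) -> float:
    """IF the product matches AND amount >= min_amount THEN use that row's rate."""
    for rule in rules:
        if rule["product"] in ("*", product) and amount >= rule["min_amount"]:
            return rule["rate"]
    raise LookupError(f"no rule matches {product!r}, {amount}")

print(commission_rate("insurance", 150_000))  # 0.05
print(commission_rate("insurance", 50_000))   # 0.04
print(commission_rate("loan", 150_000))       # 0.02
```

Note the ordering dependency: the more rules there are, the harder it becomes to change one safely, which is why a large rule base is less agile than a single parameter.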

You should have picked up some inter-related themes here: the complexity of, or the skill needed for, making changes to a script or rule engine; the need for an easy-to-use interface to make the changes; and the need for a relatively easy and error-free method of making changes. If you need to update ten parameters in ten different places, or change 17 rules in an If-Then rule base, it may not be as simple, and it is less agile.

Most modern software products are fairly agile, with scripting, table-based parameters and rule-driven execution engines. The use of style sheets to generate a personalized user interface, and of specialized components like workflow or process management engines, also provides agility.

Flexibility is a different beast. Layered architectures and specialized components for workflow, rule execution and user experience can make it easier to accommodate different ways of doing the same things. They make it easier to make the necessary changes, but they do not provide flexibility as such. End users do not value the potential flexibility of an architecture but the delivered functionality. This is a major problem. Even analysts from Gartner and Forrester do not have a good way to measure flexibility and use proxy measures like the number of installations or the types of users (life insurers and health insurers, or make-to-order and make-to-stock businesses).

Flexibility is derived from matching the application model to the business model and then generalizing the model. I will illustrate with a concrete example. Most business applications have the concept of a person acting as a user (operator) or as a manager authorizing a transaction. So a clerk may enter a sales refund transaction and a supervisor may need to log in to authorize this refund. The simplest (and least flexible) way to implement this is to define each user as either a clerk or a supervisor. A more sophisticated model introduces the concept of a role. A user may play the role of clerk in one transaction and supervisor in another. Certain roles can authorize certain types of transactions up to certain financial limits. So user BBB can approve sales refunds up to 100,000 INR as a supervisor. However, BBB as manager of a section can initiate a request for additional budget for his section. In this case BBB is acting as a clerk, and DDD, the General Manager who approves the budget extension request, is acting as a supervisor. This model is inherently more flexible. It is also not easy to retrofit this feature by making changes to parameters or If-Then rules: if the application does not model the concept of role, all the agility in the product is of little use.

Greater flexibility can be achieved by generalizing the concept of user to software programs. So in certain high-volume businesses a “power user” can run a program that automatically approves a class of transactions under a certain set of control parameters. This “virtual user” is recorded as the approver on the transaction, and the application keeps a trail of the actual control parameters and the power user who ran this “batch”.
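
A minimal sketch of this role model, assuming a simple in-memory design: the classes `Grant`, `User` and `Transaction`, the functions `initiate` and `approve`, the users AAA and PPP and the 5,000 INR batch limit are hypothetical, and the rule that an initiator cannot approve their own transaction is an added assumption. BBB and DDD follow the example in the text. The point is that the role is attached to the grant, not to the user, and that a program can hold the same grants as a person.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Grant:
    role: str                    # "clerk" (initiate) or "supervisor" (approve)
    txn_type: str                # e.g. "sales_refund", "budget_extension"
    limit: float = float("inf")  # financial limit for this role and transaction type

@dataclass
class User:
    user_id: str
    grants: list = field(default_factory=list)
    is_virtual: bool = False     # True when a program acts as the approver
    run_by: str = ""             # power user who launched the virtual user

    def can_play(self, role, txn_type, amount):
        return any(g.role == role and g.txn_type == txn_type and amount <= g.limit
                   for g in self.grants)

@dataclass
class Transaction:
    txn_type: str
    amount: float
    initiated_by: str = ""
    approved_by: str = ""
    audit: dict = field(default_factory=dict)

def initiate(user, txn):
    if not user.can_play("clerk", txn.txn_type, txn.amount):
        raise PermissionError(f"{user.user_id} cannot initiate {txn.txn_type}")
    txn.initiated_by = user.user_id

def approve(user, txn, control_params=None):
    if user.user_id == txn.initiated_by:  # assumed maker-checker rule
        raise PermissionError("a user cannot approve a transaction they initiated")
    if not user.can_play("supervisor", txn.txn_type, txn.amount):
        raise PermissionError(f"{user.user_id} cannot approve this {txn.txn_type}")
    txn.approved_by = user.user_id
    if user.is_virtual:
        # trail of who ran the batch and under which control parameters
        txn.audit = {"run_by": user.run_by, "control_params": dict(control_params or {})}

# BBB is a supervisor for refunds (up to 100,000 INR) but a clerk for budget requests
aaa = User("AAA", [Grant("clerk", "sales_refund")])
bbb = User("BBB", [Grant("supervisor", "sales_refund", 100_000),
                   Grant("clerk", "budget_extension")])
ddd = User("DDD", [Grant("supervisor", "budget_extension")])

refund = Transaction("sales_refund", 80_000)
initiate(aaa, refund)
approve(bbb, refund)

request = Transaction("budget_extension", 500_000)
initiate(bbb, request)
approve(ddd, request)

# A "virtual user" that approves small refunds in a batch run by power user PPP
bot = User("AUTO-REFUND", [Grant("supervisor", "sales_refund", 5_000)],
           is_virtual=True, run_by="PPP")
small = Transaction("sales_refund", 2_000)
initiate(aaa, small)
approve(bot, small, {"max_amount": 5_000})
print(small.approved_by, small.audit)
```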

Flexibility comes from a good understanding of users’ context and insight into their underlying or strategic intent. Businesses and users will vary the tactics they use to meet their intent based on context. Consider a simplistic example to illustrate. I need to urgently communicate some news to a business partner. I try calling his cell, to no avail. For an Indian contact I would send an SMS, but for a US contact (US users are not yet fans of SMS or texting) I would leave a voice message on his phone or send an email. A smartphone that lets me type a message once and send it as an SMS or an email is more flexible than one where I have to write the email and the SMS separately.
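
A toy Python sketch of "fixed intent, context-dependent tactic". `Contact`, `FALLBACK_CHANNELS` and `send_urgent` are hypothetical names, and the per-country preferences are assumptions drawn loosely from the example above. The user types the message once, and the context (the country, and whether the call was answered) picks the channel.

```python
from dataclasses import dataclass

@dataclass
class Contact:
    name: str
    country: str   # e.g. "IN" or "US"

# Hypothetical preference table: written channels to fall back on, by country,
# when a call is not answered.
FALLBACK_CHANNELS = {"IN": ["sms"], "US": ["email"]}

def send_urgent(message: str, contact: Contact, call_answered: bool) -> list:
    """The intent (get this news across) is fixed; the channel depends on context."""
    if call_answered:
        return [("call", message)]
    channels = FALLBACK_CHANNELS.get(contact.country, ["sms", "email"])
    # The message is typed once and reused for whichever channel the context picks.
    return [(channel, message) for channel in channels]

print(send_urgent("Shipment delayed to Friday", Contact("Ravi", "IN"), call_answered=False))
print(send_urgent("Shipment delayed to Friday", Contact("Jim", "US"), call_answered=False))
```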

Software designers and architects frequently generalize all types of changes as extensions. They are not interested in understanding the intent and context of usage and want a horizontal, generic solution to all changes. This leads to an overly abstract design with extension mechanisms to change logic, screens, database and reports; in essence, to developing a fourth-generation programming environment or Rapid Application Development (RAD) environment. These help by making programming easier and faster, but they are no substitute for understanding users’ context and designing the application architecture to match and exploit it.

Users relate to a product that speaks their language and seems to understand them. They also get excited and impressed when they see new ways of doing their business, improving their revenue, reducing cost and improving customer satisfaction. Most managers want to make changes incrementally, so the ability to “pilot” changes with a smaller segment of users, customers and products, while continuing in the traditional way with the larger base, is of great value. That is the ultimate flexibility.
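
One common way to pilot a change with a segment is deterministic bucketing. The Python sketch below is illustrative only: `in_pilot`, the region names and the percentages are hypothetical. A customer joins the pilot only if they are in a pilot region and their stable hash bucket falls under the pilot percentage, so the rest of the base carries on in the traditional way.

```python
import hashlib

def in_pilot(customer_id: str, region: str, pilot_regions: set, pilot_percent: int) -> bool:
    """True if this customer should get the new way of working during the pilot."""
    if region not in pilot_regions:
        return False  # outside the pilot segment: carry on the traditional way
    # A stable hash puts each customer in a fixed bucket 0..99, so the same
    # customer always sees the same version for the whole pilot.
    digest = hashlib.sha256(customer_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < pilot_percent

print(in_pilot("CUST-0042", "Pune", {"Pune"}, 20))
```

Widening the pilot means raising `pilot_percent` or adding regions. Customers already in the pilot stay in it, because their bucket never changes.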

Flexibility is not a mélange of features haphazardly put together. I have seen many service companies develop a “flexible product” by adding every feature they can see in other offerings. Invariably this leads to a mythical creature that does not work. If you put a Formula 1 race engine in a Range Rover chassis with Nano steering and Maruti wheels, you have a car that does not drive! A Formula 1 car is intended for maximum speed and acceleration within safe limits, while a goods vehicle is intended for maximum load carrying at an optimum fuel cost.

Delivering flexibility requires heavy lifting to develop a good understanding of users’ domain, intent and context. It adds value by simplifying the feature set and matching users’ intent and context. If we use our imagination and our understanding of technology trends and capabilities to provide more than users have envisioned, we can lead them to new ways of working. Leadership is an important way to differentiate your product. Invest in doing this and reap the benefits.

Analytics in the Large: Solvency II

Solvency II is a European Union insurance-specific regulation, similar to Basel III for banks. It is also a quantum jump in the art and science of macroeconomic forecasting, and it imposes a level of precision and complexity that will be a challenge for all. While its implementation has been delayed, the ongoing economic crisis and the debate over more austerity versus more government-funded stimulus highlight the conflicting needs of precision (a 99.5% certainty level) and robustness (under dramatically different scenarios).
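
Solvency II calibrates its capital requirement to a 99.5% confidence level over one year, i.e. the loss expected to be exceeded once in 200 years. The Python sketch below only illustrates that percentile on simulated losses and does not implement the Standard Formula or any internal model. The lognormal loss models, their parameters and the function name `var_995` are assumptions. Running the same measure under a stressed scenario shows why precision at 99.5% and robustness across scenarios pull in different directions: the answer depends heavily on the tail you assume.

```python
import random

def var_995(losses):
    """Empirical 99.5% value-at-risk: the loss exceeded in only 1 scenario in 200."""
    ordered = sorted(losses)
    k = (995 * len(ordered) + 999) // 1000 - 1   # integer form of ceil(0.995 * n) - 1
    return ordered[k]

rng = random.Random(2012)   # fixed seed so the run is repeatable
# Hypothetical one-year loss scenarios (USD mill) under an assumed lognormal model ...
base = [rng.lognormvariate(3.0, 0.5) for _ in range(10_000)]
# ... and under an assumed stressed view of the same portfolio with a fatter tail
stressed = [rng.lognormvariate(3.0, 0.9) for _ in range(10_000)]

print(f"99.5% VaR, base: {var_995(base):.1f}  stressed: {var_995(stressed):.1f}")
```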

I authored a Point of View for a client some time back. Read the original for the full implications. The paper is a deep dive; you are forewarned.

Insurance Policy Administration: A New Architecture through a Product Server Component

I co-authored a thought leadership article some time back on a new way to architect an insurance policy administration system. This approach fits in well with the new BPM and rules-based approaches and is especially important for multi-brand and multi-line insurers.

Read the original for the full details.

Views from an Insurance Buff on the Great Meltdown

A Catastrophic Event in the Capital Markets, aka the Credit Crisis

Like a rock thrown into a tranquil pond, the subprime mortgage meltdown that kicked off in early 2007 and transformed into a much broader credit market event has spread ripples in ever-widening circles over the intervening year. Yet, unlike the proverbial pebble tossed in a pond, the intensity of the effects seems to have grown as they spread wider and wider. The repercussions have included mounting losses, growing provisioning against losses, big markdowns of mortgage-related securities, falling capital ratios and plummeting share prices.

Since last summer, fear and panic have repeatedly roiled global markets, striking blows at private-label mortgage-backed securities (MBS) and related collateralized debt obligations (CDOs) along with related derivatives, asset-backed commercial paper (ABCP) and, finally, structured investment vehicles (SIVs).

This post attempts to take a broad-brush, helicopter view of the landscape and approaches this event as a catastrophe with multiple effects, much like an earthquake, which causes direct losses but also leads to flooding (ruptured mains and overflowing lakes), fire (broken power lines) and trauma (especially among children).

By now the markets have moved past the stage of denial about the scale and duration of the credit crunch, but acceptance is yet to come. The initial upbeat statements that emerged in Q3 2007, that this was a USD 40-50 billion, one-quarter slowdown, have given way to larger and more sober estimates. While JPMC, Goldman Sachs and Bill Gross of PIMCO (a heavyweight bond player) estimated between 300 and 600 billion of mark-to-market notional losses, the International Stability Forum under the Bank for International Settlements (BIS) came up with a humbling number of USD 945 billion. See the sidebar below quoting from a Forbes note on this.

Most analysts would agree on the three main drivers of the broad credit crisis:

1) Asset inflation: a sustained boom in housing prices and other (irrational?) exuberance.

2) Lack of trust between financial counterparties. The musical chairs of the loan spiral, where originators sold loans to others who packaged and repackaged them in increasingly opaque structures, came to a crashing end as the largest financial players stopped trading with each other, fearing for the liquidity and solvency of their counterparties.

3) Fraud. Financial institutions (supposedly astute buyers and sellers) are already suing each other, and lawyers are preparing for a decade of lawsuits. There is plenty of evidence that underwriting standards slipped, and slipped a lot, in this spiral. There is a lot of speculation that the quarterly financial statements of many banks were wrong and probably deliberately optimistic. Some commentators have seen shades of the Enron saga here. In this view of events, Enron was less an energy trader and more a package-trader of structured products derived from energy asset prices, and it suffered a bank run.

The financial press has picked up asset inflation as a cause of the current crisis, along with the similarity to Japan, where the ultimate cost of trying to keep banks afloat was near-zero interest rates for years (even today 0.75%) and an estimated USD 4,000 billion, or 120% of Japan’s average annual GDP, over 16 long years.

What has not yet been picked up is the similarity to German reunification, in the lack of trust and consequent liquidity issues, as a cause of the current crisis. When the German Chancellor set the exchange rate between the erstwhile East German currency and the Deutsche Mark at 1:1 and accepted the assets of enterprises at their book value, he set in motion a huge game of double and triple guessing. Communist East Germany had few audited financial statements, so many of the mergers (like Allianz buying out the East German post offices) occurred under opaque conditions. It is estimated that it cost over USD 2,400 billion over 10 years to restore confidence, i.e. close to 100% of Germany’s average annual GDP over those 10 years.

Fraud as the proximate cause, and the similarity of this derivatives-based crisis to the London Market Excess of Loss (LMX) spiral at Lloyd’s of London, have not yet been picked up. Lloyd’s had a loss of USD 4 billion over 8 years (starting in the late 80s) from a core fraud of USD 20 million. [These are anecdotal numbers from personal sources for Lloyd’s. There is a RAND monograph speculating on the cost of Korean reunification.]

The similarities between the LMX spiral and the current crisis (derivatives, the use of leverage, opaque accounting and the lack of regulation) are very close; LMX is almost a prototype. I will expand on this similarity and on the reasons for projecting the ultimate net cost of the crisis at over 2,500 billion, with a 4-year development. My guess is that the worst is yet to come. Until insurers, who are the ultimate holders of much of the credit risk in their bond and pension portfolios, start showing severe pain, we are still at the beginning of the cycle. AIG has already shown the way, and Swiss Re, in a pre-emptive arrangement, has secured a line of credit equivalent to 20% of its portfolio from Warren Buffett’s Berkshire Hathaway.

Now for some of the common features of the credit bubble and the LMX spiral. [I am not suggesting that credit default swap (CDS) or other markets are insurance markets. I am pointing out structural similarities in governance and risk-reward that lead to abuse and bubbles.]

Derivatives with Leverage

Excess of Loss (XL) is a specific type of reinsurance contract. It is a derivative covering a ceding insurer (the buyer of the XL contract), who buys protection on a specified portfolio of direct insurance contracts from the reinsurer (the seller of the contract). It has a floor (deductible) below which losses suffered by the buyer are not covered, and a cap or limit above which the seller will not pay for losses of the buyer. Thus if National Insurance (NI) is insuring Reliance’s Jamnagar refinery, it may buy an XL contract from GIC for 100 Mill x 500 Mill (a 100 Mill deductible and a 500 Mill limit). If there is a covered event like a fire at the Jamnagar refinery and NI has claims from Reliance exceeding USD 100 Mill, it can call upon GIC to pay those claims (100%) up to 500 Mill, i.e. up to USD 600 Mill of losses “from ground up” (FGU). XL can be layered, similar to tranches in a structured product like Asset Backed Securities (ABS). Thus NI can buy a second layer from Swiss Re for 600 x 600 Mill FGU (or 0 x 600 above Layer 1), so that it is protected up to USD 1.2 billion of losses. In a loss event where losses are up to USD 1.2 billion, NI will only have to pay the first 100 Mill. The rest will be recovered from its reinsurers: 500 Mill from GIC and 600 Mill from Swiss Re. Rates on XL are pretty cheap, and upper layers get cheaper, running between 100 and 10 basis points.
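
The layering arithmetic can be checked with a small Python sketch. `XLLayer` and `allocate` are hypothetical names, amounts are in USD million, and the model deliberately ignores real-world features such as reinstatements, co-insurance and aggregate limits. Each layer pays the slice of the ground-up loss between its attachment point and attachment plus limit; the ceding insurer keeps everything else.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class XLLayer:
    reinsurer: str
    attachment: float   # ground-up loss (USD mill) at which the layer starts to pay
    limit: float        # most the layer will pay (USD mill)

    def recovery(self, ground_up_loss: float) -> float:
        # the slice of the loss between attachment and attachment + limit
        return min(max(ground_up_loss - self.attachment, 0.0), self.limit)

def allocate(ground_up_loss, layers):
    """Split a ground-up loss between the ceding insurer and its XL layers."""
    recoveries = {layer.reinsurer: layer.recovery(ground_up_loss) for layer in layers}
    retained = ground_up_loss - sum(recoveries.values())
    return retained, recoveries

# NI's program from the text: 100 x 500 with GIC, then 600 x 600 with Swiss Re
program = [XLLayer("GIC", 100, 500), XLLayer("Swiss Re", 600, 600)]
for loss in (80, 400, 1200, 1500):
    print(loss, allocate(loss, program))
```

For a 1,200 Mill loss, NI retains 100, GIC pays 500 and Swiss Re pays 600, matching the text. Above 1.2 billion, the excess falls back on NI: a 1,500 Mill loss leaves NI with 400.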

Thus we can see the derivative nature of XL. It is based on a collection of direct financial instruments (the original policies between insurer and policyholders). It has leverage: typically the ceding insurer can cede 90% of its risks and retain only 10%, a 10X leverage. It has call- and put-option-type features.
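
The option-like nature of an XL layer can be made precise. Its payoff equals a call spread on the ground-up loss: long a call struck at the attachment point, short a call struck at attachment plus limit. The Python check below uses the GIC layer figures from the text. The function names are hypothetical, and the sketch ignores premium, timing and reinstatements.

```python
def call(x, strike):
    """Payoff of a call option at expiry."""
    return max(x - strike, 0.0)

def xl_layer(loss, attachment, limit):
    """XL layer: pays the loss above the attachment, capped at the limit."""
    return min(max(loss - attachment, 0.0), limit)

def call_spread(loss, attachment, limit):
    # long a call struck at the attachment, short a call struck at attachment + limit
    return call(loss, attachment) - call(loss, attachment + limit)

# GIC's 100 x 500 layer from the text, at several ground-up losses (USD mill)
for loss in (50, 300, 600, 900):
    print(loss, xl_layer(loss, 100, 500), call_spread(loss, 100, 500))
```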

Credit derivatives allow more leverage. In the heady days it was not unusual to have a 100X exposure, 10 times more than in insurance markets.

Unregulated Entities with Opaque Accounting and wide global participation

Lloyd’s of London was incorporated by a private Act of Parliament, the Lloyd’s Act 1871, which was updated in 1982. It was only in late 2000 that it was brought under the ambit of the UK regulator, the FSA. So during the period of the LMX spiral (the late 80s) there was no regulator apart from the Society itself. Lloyd’s uses a syndicate structure much like an exchange, where members who trade have their own books. Each syndicate has an agent who manages it, and members who provide the syndicate’s capital, which backs the risks it underwrites. Syndicates used to publish a 3-year rolling account (the famous non-GAAP development accounting), and profits and losses for a trading year were finalized 48 months later.

At the base of the credit crisis (more accurately, the subprime credit crisis) were the mortgages, packaged into Mortgage Backed Securities (MBS), in turn aggregated into Collateralized Debt Obligations (CDOs) and CDO-squared. They went off balance sheet in the last stage, when CDOs were bundled into multi-layered ABCP (Asset Backed Commercial Paper) issued by SIVs (Structured Investment Vehicles) and backed by AAA ratings!

At this level there was a lack of transparency, with assets and liabilities valued by opaque models because most of the time there are no directly observable market prices, as well as a severe maturity mismatch (short-term money market borrowings funding long-term structures) that led to the original liquidity crisis. Both the LMX spiral and the credit markets were global and had numerous large and small participants from all parts of the world. We have already learnt of the surprising exposure some German banks had to subprime. We are now beginning to hear of the exotic products bought by Indian corporates.

Trading Chains

Reinsurance is a global business where reinsurers seek diversification to build a balanced book. Even in normal times there are multiple chains of reinsurance, and it is not unusual for the base risk (the Jamnagar refinery) to pass through 4-5 parties as each reinsurer in turn buys reinsurance to protect itself. Capital market players behave in exactly the same way. So while JPMC may have swapped a USD 300 Mill fixed-interest-rate infrastructure bond for a variable LIBOR + 1% bond, it would turn around somewhere else and shed some of the variable-rate risk by taking a fixed-rate bond in some other swap. This complex web of contracts makes valuing the ultimate net exposure difficult.

In the LMX spiral the courts finally got into the act, and market participants were horrified to find the chain extending 10-12 deep. Most curiously, due to the aggregation and slicing and dicing of portfolios, quite often an insurer had some of its own policies ultimately reinsured by itself!
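
A stylized Python sketch of how a spiral can route risk back to its origin. This is an assumption-laden toy, not a model of real LMX contracts, which were excess-of-loss layers rather than proportional cessions. Four hypothetical parties sit in a ring, and each keeps 10% of the loss it receives and cedes the rest to the next party. Party A ends up bearing far more than its own 10% retention, because part of the loss comes back to it. Total claims booked across the market approach 1/retention, or 10 times, the net loss.

```python
def spiral(loss, parties, retention, handoffs):
    """Toy proportional spiral: each party keeps `retention` of the loss it
    receives and cedes the rest to the next party in a ring."""
    held = {p: 0.0 for p in parties}   # net loss each party ends up bearing
    gross = 0.0                        # claims booked across the market, every hand-off counted
    amount = float(loss)
    for step in range(handoffs):
        party = parties[step % len(parties)]
        gross += amount
        held[party] += amount * retention
        amount *= 1 - retention
    return held, gross, amount         # amount = loss still circulating

held, gross, circulating = spiral(100, ["A", "B", "C", "D"], retention=0.10, handoffs=200)
print({p: round(v, 1) for p, v in held.items()}, round(gross), round(circulating, 6))
```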

Capital markets have similar characteristics. In the early days of this crisis, ISDA (International Swaps and Derivatives Association) estimated the net loss after netting out counterparties at USD 43 billion (Bill Gross of PIMCO had estimated USD 400 billion of losses). It is worth noting that some observers call these notional mark-to-market write-downs, as they assume the markets will recover after some time. Actuaries had a long run-in with the Basel II regulators on this: most pension assets and liabilities are valued at long-term interest rates and not adjusted (marked to market) for “temporary” market crashes. However, they lost the argument, and both the regulators and IFRS (International Financial Reporting Standards) propose mark-to-market requirements.
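
Why a netted figure can be so much smaller than a gross one is easy to show with hypothetical trades. In the Python sketch below, the trades, amounts and function names are invented for illustration. Bilateral netting offsets obligations within each pair of counterparties. Multilateral netting offsets each party against the whole market, which assumes a central clearing arrangement and is an idealized lower bound.

```python
from collections import defaultdict

# Hypothetical trades: (payer, receiver, amount owed in USD mill)
trades = [("A", "B", 100), ("B", "C", 90), ("C", "A", 80), ("B", "A", 30)]

def bilateral_net(trades):
    """Net each pair of counterparties separately (positive: first party owes second)."""
    pairs = defaultdict(int)
    for payer, receiver, amount in trades:
        first, second = sorted((payer, receiver))
        pairs[(first, second)] += amount if payer == first else -amount
    return {pair: net for pair, net in pairs.items() if net}

def multilateral_net(trades):
    """Net each party against the whole market (positive: party is owed money)."""
    position = defaultdict(int)
    for payer, receiver, amount in trades:
        position[payer] -= amount
        position[receiver] += amount
    return dict(position)

gross = sum(amount for _, _, amount in trades)
bilateral = sum(abs(v) for v in bilateral_net(trades).values())
multilateral = sum(v for v in multilateral_net(trades).values() if v > 0)
print(gross, bilateral, multilateral)   # 300 240 20
```

The netted figure is only meaningful if every counterparty in the chain actually pays, which is exactly what came into doubt once trust broke down.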

Insurers use the term Ultimate Net Loss (UNL) to allow for credits from prior layers of reinsurers and from one’s own reinsurers, as well as recoveries from salvage and subrogation (claims against related parties for damages). It generally takes 8-12 years to fully settle a large claim like a major storm or an earthquake. Settlement cycles are quarterly, but cedants can make cash calls and get part payments well before the ultimate costs are clear. In the capital markets settlement cycles are much faster, but there is no prepayment. So cash flow or liquidity problems abound even if you have protected yourself with counter-swaps. As a banker noted, “… merely because the river is on average 4 feet deep does not mean we cannot sink in the deep end….”

Greed, Musical Chairs, Nonexistent Controls and Fraud

At the peak of the LMX spiral, insurers were charging vanishingly small rates for taking on risks, which they then traded away in their reinsurance. Brokers were selling the same pool of risk many times over as syndicates reinsured themselves. In one case, properly dissected in the courts after the full 12 cycles of trading were laid out, a full 40% of the premiums had gone into commissions. Similarly, in capital markets the risk spread had narrowed considerably (credit default swaps at 28 basis points before the crisis compared to 100 bps+ now), but the churning of trades generated fat margins for the traders.
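
How can 12 cycles of trading consume 40% of the premium? Here is a back-of-the-envelope Python sketch, under an assumption that is not from the source: each cycle deducts the same commission rate c from the premium it passes on, so the share left after 12 cycles is (1 - c)^12. Under that assumption, a modest per-cycle commission compounds to the figure in the court case.

```python
# Assumption (not from the source): each of the 12 trading cycles deducts the same
# commission rate c from the premium it passes on, so the share left is (1 - c) ** 12.
cycles = 12
share_lost = 0.40                        # 40% of premiums went into commissions
per_cycle = 1 - (1 - share_lost) ** (1 / cycles)
print(f"implied commission per cycle: {per_cycle:.1%}")   # 4.2%
```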

The underwriters at a syndicate are supposed to scrutinize the risk and decide what percentage they will take and at what rates. Most were co-opted into this scheme. They were incentivized by the sum insured they wrote and not by the profits (it took three years to “know” the profits anyway). Some of the leading lights, who actually set the benchmark in rating a risk, were co-opted by side deals in which brokers passed “soft money” to them. This is a complex story, involving the old boys’ club nature of the Lloyd’s business model, and it is the reason why Lloyd’s has a dis-investment clause in its constitution today. The parallel to this is the rating agencies, like Moody’s, making so much money rating structured products and becoming a trifle lax about the risks inherent in these structures. In retrospect it is obvious that a bundle of junk mortgages cannot be AAA merely because it has been wrapped by a better-rated company (the municipal bond insurers).

Fraud sent the overheated LMX spiral market crashing. It transpired that in a number of cases there were no real risks out there. Similarly, the catalyst of the credit crisis was fraud by lenders passing on bundles of loans that were not really underwritten or backed by any assets or earnings.

Concentration in a few players

To bring these parallels alive, I quote from a specialist lawyer describing the LMX spiral:

It is well known that, in general terms, a spiral effect occurs when excess of loss reinsurers extensively underwrite each other’s excess of loss protections. This leads to a transfer of exposures to the same players within the market rather than their dispersal outside the market. The effect of the transfer of exposures was starkly demonstrated by the series of losses which occurred between 1987 and 1990, including UK Storm 87J, the loss of Piper Alpha, and Hurricane Hugo:

- the catastrophe losses were concentrated in a handful of specialist excess of loss syndicates within Lloyd’s rather than evenly dispersed in the market. The four Gooda Walker syndicates, for example, sustained 30 per cent of the entire market’s losses in 1989.

- because the losses were so concentrated, and there was so little “leakage” from the spiral by way of retentions and coinsurance, the catastrophe losses incurred by these specialist syndicates spiralled up and out of the top of their reinsurance programmes. For example, Gooda Walker syndicate 290 suffered a gross loss of US$385 million in respect of Hurricane Hugo against reinsurance protection purchased of US$170 million

- the catastrophe losses had a high ratio of gross to net loss, reflecting the extent to which the losses spiralled. For example, on a gross basis the Piper Alpha loss exceeded by a multiple of 10 the net loss that was covered on the London market.

- traditional methods of rating, whereby the lower or working layers have a higher rate on line than the higher layers to reflect their greater exposure to losses, had no relevance because the higher layers of insurers protections were not significantly less exposed than the lower layers. In the litigation that followed the catastrophic losses, in which the Names on the LMX Syndicates accused their underwriters of negligence, the traditional rating methods adopted by the excess of loss underwriters were revealed as inappropriate to deal with the effect of the spiral.

Those knowledgeable about capital market trading patterns would know that JPMC held over 40% of default swaps and would have been badly hit if Bear Stearns had gone under. Similarly, most hedge funds have taken funds from the prime brokerage units of these large banks. So in reality there is an enormous concentration of risk within the top 20 global players. The Fed simply could not afford to let a relatively small broker/investment bank like Bear Stearns fail. Our RBI Governor, Mr Y. V. Reddy, noted in a CNBC interview that what was amazing about the current crisis was that the top 20 financial players in the world do not trust each other’s solvency or liquidity. Even more telling, the RBI and many other central banks have quietly shifted their money market funds (investments) out to other central banks.

So How Bad Can It Get?

If we use the LMX Spiral as a model then the maths runs as follows:

For the LMX spiral, the ultimate loss was 4 billion on core fraud losses of 20 million, a 200X multiple. Capital markets are 10X more leveraged, so assuming a core loss of 2 billion, gross losses come to around 2 billion × 200 × 10 = 4,000 billion. The International Stability Forum reduced this estimated gross loss of 4,000 billion to an optimistic 1,000 billion, assuming liquidity and solvency assistance from central banks. As of 1 April 2008, financial institutions had declared around 200 billion of losses, as tabulated by Bloomberg, and planned to raise around 60 billion in new capital.
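
The projection above is simple arithmetic, spelled out here in Python with the text's own figures (in USD million). The 2 billion core loss is the text's assumption, and so is carrying the LMX multiple over to the capital markets.

```python
# Figures in USD million, as given in the text
lmx_ultimate_loss = 4_000
lmx_core_fraud = 20
spiral_multiple = lmx_ultimate_loss / lmx_core_fraud        # 200x

leverage_factor = 10        # credit markets ~100x vs insurance ~10x leverage
assumed_core_loss = 2_000   # the text's assumed core loss of USD 2 billion

gross_loss = assumed_core_loss * spiral_multiple * leverage_factor
print(spiral_multiple, gross_loss / 1_000)   # 200.0 4000.0 (multiple, USD billion)
```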

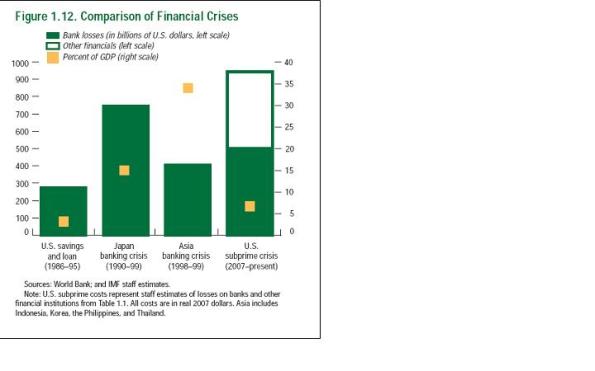

In Japan and Germany the pain averaged 10% of GDP, spread over 10-16 years. Given a 12 Trillion US economy, that implies around USD 120 Billion per year for quite some time. However, just as in the savings and loan crisis, the US may clean up the mess faster, accelerating the losses. Both the IMF and the UK Prime Minister have suggested that the large banks are holding back the real extent of their losses. The consensus estimate is 800 Billion over 4 years, or around 200 Billion per year (about 1.7% of US GDP). The IMF estimates this at over 35% of US GDP. See chart.
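
The per-year figures in this paragraph follow from simple division. The 12 Trillion GDP, the 10% benchmark, the 10-16 year horizon and the 800 Billion over 4 years are taken from the text; spreading losses evenly across the years is a simplifying assumption of this sketch, and `per_year` is a hypothetical helper.

```python
US_GDP = 12e12  # USD, figure used in the text


def per_year(total_loss: float, years: float) -> float:
    """Spread a total loss evenly over a number of years (simplifying assumption)."""
    return total_loss / years


benchmark_total = 0.10 * US_GDP  # Japan/Germany benchmark: ~10% of GDP in total
for years in (10, 16):
    annual = per_year(benchmark_total, years)
    print(f"benchmark over {years} yrs: {annual / 1e9:.0f} bn/yr "
          f"({annual / US_GDP:.2%} of GDP)")

consensus = per_year(800e9, 4)  # consensus: 800 bn over 4 years
print(f"consensus: {consensus / 1e9:.0f} bn/yr ({consensus / US_GDP:.2%} of GDP)")
```

The 120 Billion per year figure corresponds to spreading the 10% benchmark over about ten years, and the 800 Billion consensus works out to 200 Billion a year, roughly 1.7% of a 12 Trillion GDP.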

The fly in the ointment is that bankers have not yet started thinking in terms of Maximum Possible Losses (MPL). Banks, and much of the Basel II framework, are currently skewed towards trading losses: the gyrations in market values that occur every trading day. Under normal market conditions these fluctuate within small ranges (2% for US equities) around a long-term trend, say an upward growth of around 4-5% a year in real terms after inflation. However, what we are witnessing is not a normal trading event but a catastrophe event. Much like a Kobe earthquake or a Katrina, it will take a fairly long time to see the full costs, and the side effects or collateral damage are more expensive than the main loss.
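
One way to see why a framework calibrated on everyday trading moves misses a catastrophe is to ask how often a normal model expects a large one-day fall. The sketch assumes, for illustration only, that the 2% range in the text is a daily standard deviation, that daily returns are normally distributed with zero mean, and that a year has 252 trading days; the function names are hypothetical.

```python
import math


def normal_tail_prob(fall_pct: float, daily_sigma_pct: float = 2.0) -> float:
    """P(one-day return <= -fall_pct) if returns are N(0, daily_sigma_pct)."""
    z = fall_pct / daily_sigma_pct
    return 0.5 * math.erfc(z / math.sqrt(2))  # upper-tail probability of a standard normal


def years_per_event(prob: float, trading_days: int = 252) -> float:
    """Average waiting time, in years, for an event with daily probability `prob`."""
    return 1.0 / (prob * trading_days)


for fall in (6, 8, 10):
    p = normal_tail_prob(fall)
    print(f"{fall}% one-day fall: p = {p:.2e}, about once every {years_per_event(p):,.0f} years")
```

Under these assumptions a 10% one-day fall (a five standard deviation move) should arrive roughly once in well over ten thousand years, yet markets have produced falls of that size far more often. That gap is what the catastrophe framing points at.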

Insurers have a long history of dealing with catastrophes and have done a reasonable job of estimating ultimate costs. Two weeks after 9/11, insurers were estimating 30-50 Billion in direct costs and another 30-40 Billion in indirect costs, or 60-90 Billion overall. Seven years later, the current estimates are a total loss of around 70-90 Billion. It will take a decade for the final sums to be done, but the pattern is fairly well established and the net loss is unlikely to exceed 110 Billion.

By contrast, HSBC signalled the onset of the current credit crisis in February 2007, and even as late as October 2007 most bankers were somewhat smug in assuming that this was a mere sub-prime crisis that would not touch prime and other lending. Unfortunately it has, and now the real economy is also feeling the heat through surging inflation.

Insurers distinguish between two concepts: Possible Maximum Loss (PML) and Estimated Maximum Loss (EML). In a normal fire or flood (a 1-in-5-year event) it is unlikely that the whole building will become useless. Perhaps three or four floors near the fire or the ground will be unusable, but the rest of a multi-tenanted high-rise may still be functional. This is EML: losses under normal loss expectancy (trading losses in capital markets), or the maximum foreseeable loss for optimistic traders and managers with short memories (180 days of trading only!). Under an extreme event (the Great Depression, Katrina, a 1-in-250-year event), however, there may be multiple causes of loss (property, people, third parties) and the loss can escalate. For example, a fully loaded jumbo passenger aircraft represents a possible maximum loss (PML) of around USD 1,000 Million, even though the aircraft or hull may be worth only 70 Million at most. Similarly, an earthquake in the Bay Area can have PML estimates that are terrifyingly large, even significant compared with the GDP of the US economy.

Capital market players are only now beginning to accept that correlations among asset classes change under extreme conditions. Thus, while geographical diversification reduces risk in normal trading, a global meltdown sees equity markets in Japan, Hong Kong, India and the UK move in lock step with Wall Street. Consequently, Value at Risk (VaR) calculations go terribly wrong and capital requirements end up underestimated…
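
The VaR point can be made concrete with the textbook variance-covariance (parametric) VaR, used here as a stand-in for whatever models banks actually run. The sketch assumes an equally weighted portfolio across five equity markets (US, Japan, Hong Kong, India, UK), an identical 2% daily volatility for each, a single common correlation and a hypothetical USD 100 million position; all of these numbers are illustrative, not market estimates. It compares a calm-market correlation of 0.3 with a crisis correlation of 0.95.

```python
import math
from statistics import NormalDist


def parametric_var(value, weights, sigmas, corr, confidence=0.99):
    """One-day variance-covariance VaR: value * z * portfolio standard deviation."""
    n = len(weights)
    variance = sum(
        weights[i] * weights[j] * sigmas[i] * sigmas[j] * corr[i][j]
        for i in range(n)
        for j in range(n)
    )
    z = NormalDist().inv_cdf(confidence)  # about 2.33 at 99%
    return value * z * math.sqrt(variance)


def uniform_corr(n, rho):
    """Correlation matrix with 1 on the diagonal and `rho` everywhere else."""
    return [[1.0 if i == j else rho for j in range(n)] for i in range(n)]


markets = ["US", "Japan", "Hong Kong", "India", "UK"]
weights = [1 / len(markets)] * len(markets)
sigmas = [0.02] * len(markets)  # assumed 2% daily volatility for every market
value = 100e6                   # hypothetical USD 100 million portfolio

calm = parametric_var(value, weights, sigmas, uniform_corr(len(markets), 0.3))
crisis = parametric_var(value, weights, sigmas, uniform_corr(len(markets), 0.95))
print(f"99% 1-day VaR, calm: {calm / 1e6:.2f}m, crisis: {crisis / 1e6:.2f}m "
      f"({crisis / calm:.2f}x)")
```

Diversification that looks real at a correlation of 0.3 largely disappears at 0.95: the crisis VaR comes out roughly 1.5 times the calm figure, so capital sized on calm-market correlations falls short exactly when it is needed.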

Insurers, especially reinsurers like Swiss Re and Munich Re, tend to think a lot in terms of extreme events and the conditional losses given that an extreme event has happened. These are no longer based on the probabilities of a normal distribution. Banking and Basel II modelers, as well as the rating agencies, are now waking up to the need to use expected tail loss (ETL) in their models for conditional VaR, rather than normal distributions adjusted with volatility models like GARCH. Actuaries may finally regain the voice they lost to stochastic modelers… (but that is another story).
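
The difference between VaR and expected tail loss (ETL, also called conditional VaR or expected shortfall) is easiest to see on a sample of losses. The sketch uses historical-simulation definitions: VaR is the smallest loss in the worst 1% of outcomes and ETL is the average of that worst 1%. The fat-tailed sample is drawn from a Student-t distribution with 3 degrees of freedom, a common illustrative choice rather than a fitted model, and is compared with a normal sample; the seeds, sample sizes and function names are all assumptions of the sketch.

```python
import math
import random


def var_and_etl(losses, confidence=0.99):
    """Historical-simulation VaR and expected tail loss (ETL) at `confidence`."""
    ordered = sorted(losses)
    n_tail = max(1, round((1 - confidence) * len(ordered)))  # size of the worst tail
    tail = ordered[-n_tail:]
    var = tail[0]                  # smallest loss inside the worst tail
    etl = sum(tail) / len(tail)    # average loss given we are in that tail
    return var, etl


def normal_losses(n, scale, seed):
    rng = random.Random(seed)
    return [rng.gauss(0.0, scale) for _ in range(n)]


def student_t_losses(n, df, scale, seed):
    """Student-t draws built as Z / sqrt(chi2 / df), with chi2(df) = Gamma(df/2, scale 2)."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)
        chi2 = rng.gammavariate(df / 2.0, 2.0)
        draws.append(scale * z / math.sqrt(chi2 / df))
    return draws


for name, sample in [("normal", normal_losses(100_000, 0.02, seed=1)),
                     ("student-t(3)", student_t_losses(100_000, 3, 0.02, seed=1))]:
    var, etl = var_and_etl(sample)
    print(f"{name:>12}: 99% VaR {var:.4f}, ETL {etl:.4f}, ETL/VaR {etl / var:.2f}")
```

For a normal distribution ETL sits only modestly above VaR (about 1.15 times at 99% in theory), while the fat-tailed sample pushes the ratio noticeably higher. That extra gap is the information ETL carries about how bad things get once the VaR line has been crossed.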

Coming back to the Stability Forum: its gross estimate of 4,000 Billion is itself optimistic, and the publicly announced estimate of USD 1,000 Billion is the minimum probable loss, not the maximum. That is why this great meltdown may be a seminal event like the Great Depression, creating new institutions and changing old ones…

What does it mean for IT Offshorers?

Providers are already acting on some obvious consequences: cost control, better utilization and so on. I think there are three points that may be more important:

Customers may not be able to predict their budgets well

Providers are used to working in close partnerships and depend a lot on customer guidance. Providers may need to qualify their estimates, since they too are operating in unfamiliar terrain.

Balanced portfolio

Companies need more revenue from non-US and non-BFSI segments.

Non-intuitive “bet the farm” reactions

Conventional wisdom would suggest that AVM contracts are secure. This may not be true. In a previous downturn, the experience with a Japanese insurer was different. During the collapse they cut large AVM projects, went without an AMC from IBM on their mainframes and continued to work with a de-supported version of Oracle. However, they commissioned a bet-the-farm AD/BPO project, setting up Internet self-service and a massive call centre. These were transformational projects, and they negotiated profit sharing or a fee per transaction processed rather than paying per FTE-month.

So providers may miss a bet if they focus on the cost-reduction part of the equation alone. They should be up there in transformational, revenue-generating projects with substantial skin in the game…